Fuzzy computing

Rethinking CS fundamentals through a "fuzzy" LLM lens

A few friends nudged me to finally restart my blog after a long hiatus, so here we go. Planning to use this space just to riff on new ideas I’m thinking about and explore what an AI future might look like.

The spark for this post was a discussion with James Cham, who said:

“My guess is the product that runs lots of cron jobs on my behalf will be very sticky and LLMs make cron jobs just flexible enough and easy to create to really proliferate.”

I’ve been thinking about this recently (in conversations with Henrik Berggren), and I agree! (If you don’t know what a “cron” is, I explain below.)

One of my more productive thought experiments since ChatGPT's launch 2y ago has been to take some fundamental Computer Science concept and add “fuzzy” to it. How does that change what you can do with it, or who can use it? How might computing work if it were fuzzy instead of deterministic and rules-based?

I’ll give you 3 recent examples to illustrate what I mean.

Example 1: “Fuzzy compilers”

A compiler is a fundamental concept in computer science: a program that translates human-written source code (in a language like C or JavaScript) into a lower-level program that can be executed on a specific type of device.

So what would a “fuzzy compiler” do?

Instead of formal programming languages, it would take a fuzzy human description (high-level, informal, ambiguous) of some type of artifact you want to create, and make it for you.

For example, Midjourney is a natural language “visual compiler” that produces images from text prompts (it even has various “compiler flags” and commands you can operate from within Discord).

Prototyping tools like v0.dev, bolt.new, or lovable.dev are “fuzzy frontend compilers”: they take natural language descriptions of a web app or marketing page and “compile” them to a working, deployable site.

Similarly, my TexSandbox experiment is a “fuzzy LaTeX compiler”: it takes an English (or French or Japanese…) description of math equations or diagrams you want to create, or a picture of your handwriting, and translates it to LaTeX code that can be rendered as a PDF.

(Amusingly, LaTeX itself is a macro compiler from 1984 built on Donald Knuth’s TeX compiler from 1978, which is itself compiled from an esoteric flavor of Pascal to C to various executables — it’s a tower of compilers all the way down…)

Key question: What’s your “compile target”?

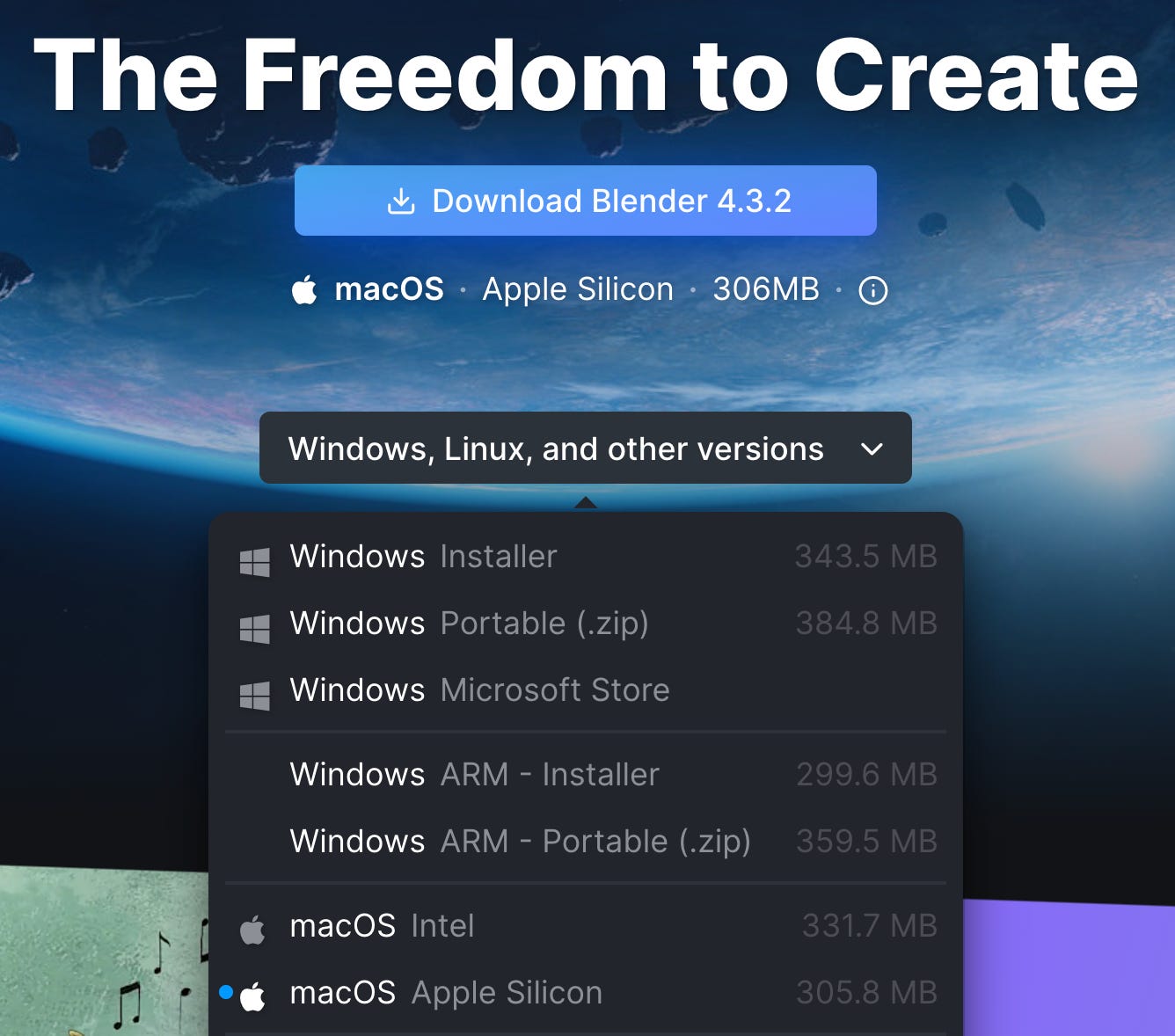

With a traditional compiler, you take a high-level description of desired behavior (some source code) and compile it to a specific “target” architecture.

For example, you might compile source code written for Golang version 1.23 to run on macOS using Apple Silicon CPUs, or for Windows running on x86 64-bit CPUs.

This compile target determines what kind of output artifact (or executable) the compiler will create from your inputs. If you pick the wrong target for your machine, the resulting program won’t run on it.

Similarly, choosing your “fuzzy compile target” is a key choice in building LLM-powered apps.

For example, v0.dev uses a very specific frontend tech stack as its “compile target” (React, shadcn/ui components, Lucide icons, Tailwind styling, and a bunch of other stuff). Claude and bolt.new use similar (but slightly different) compile targets for their frontend artifacts.

Similarly, when handling data analysis tasks, AI assistants have made different choices for their compile targets: Claude outputs JavaScript that runs in your browser (using lodash), while ChatGPT typically generates Python/pandas code that runs either in your browser (in pyodide) or on their servers. Python has a much richer ecosystem for data manipulation, while JavaScript is far richer for interactive charts, so this choice has trade-offs.

Notice how the choice of compile target can have a dramatic effect on the user experience and capabilities of LLM apps, and what you can do with the artifacts they create (where can I run this thing?).

It’s also one reason LLM apps like ChatGPT can be so hard to figure out: the available “compile targets” (and their implications) are usually undocumented and vary across models in mysterious, invisible ways.

If you’re building an application using LLMs, choosing your “compile target” is one of the most important design decisions, so choose your target wisely!
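To make the idea concrete, here is a minimal Python sketch, assuming an app that pins its compile target in two places: in the instructions sent to the model, and in a deterministic check on what comes back. `CompileTarget`, `disallowed_imports`, and the `PYODIDE_DATA` module list are hypothetical illustrations, not any product's actual configuration; the check uses only the standard-library `ast` module.

```python
import ast
from dataclasses import dataclass


@dataclass(frozen=True)
class CompileTarget:
    """Hypothetical spec of what a "fuzzy compiler" is allowed to emit."""
    language: str
    runtime: str
    allowed_modules: frozenset[str]

    def system_prompt(self) -> str:
        # State the target explicitly instead of leaving it to the model's defaults.
        modules = ", ".join(sorted(self.allowed_modules))
        return (
            f"Write one {self.language} program that runs in {self.runtime}. "
            f"Import only these modules: {modules}."
        )


def disallowed_imports(source: str, target: CompileTarget) -> set[str]:
    """Deterministically check generated code: which top-level imports miss the target?"""
    imported: set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            imported.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # Relative imports (from . import x) are skipped: they stay inside the program.
            imported.add(node.module.split(".")[0])
    return imported - target.allowed_modules


# Hypothetical target loosely modeled on the "Python in the browser" option described above.
PYODIDE_DATA = CompileTarget(
    language="Python 3.12",
    runtime="Pyodide in the user's browser",
    allowed_modules=frozenset({"pandas", "numpy", "math", "statistics"}),
)

generated = "import pandas as pd\nimport requests\nfrom numpy.linalg import inv\n"
print(disallowed_imports(generated, PYODIDE_DATA))  # {'requests'}
```

The prompt only asks the model to stay on target; the `ast` check is what actually enforces it, so an off-target program can be rejected or sent back for another attempt.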

Example 2: “Fuzzy MapReduce”

I stumbled into this example in December, while building a little LLM-powered data pipeline for a friend at a startup.

He wanted to merge 4 CSVs of business listings from different sources into one master CSV. Sounds simple, right?

The problem was that business names and addresses used slightly different formats in different source files. Is “Holden Brothers HVAC” in “Boulder, CO” the same business as “HOLDEN BROS HVAC AND REFRIGERATION INC.” in zip code 80301? That sort of thing, but times 500k decisions.

This is a very common type of real-world data workflow (sometimes called “fuzzy merging” or “entity resolution”). Most data in the world is like this: messy, unstructured, full of typos and HTML encoding errors and various other annoyances and edge cases.
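Why not just use classic fuzzy string matching? A quick check with Python's standard-library `difflib.SequenceMatcher` shows the problem. The `normalize` and `name_similarity` helpers are illustrative, and "Holden Brothers Plumbing" is an invented listing used only as a contrast. Character overlap knows nothing about "Bros" meaning "Brothers", or about which extra words in a legal name don't matter, so the true match scores lower than a different business.

```python
import re
from difflib import SequenceMatcher


def normalize(name: str) -> str:
    """Lowercase, turn punctuation into spaces, and collapse whitespace."""
    return " ".join(re.sub(r"[^a-z0-9]+", " ", name.lower()).split())


def name_similarity(a: str, b: str) -> float:
    """Classic character-level similarity in [0, 1] (SequenceMatcher.ratio)."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


same_business = name_similarity("Holden Brothers HVAC", "HOLDEN BROS HVAC AND REFRIGERATION INC.")
# "Holden Brothers Plumbing" is an invented listing, used only as a contrast.
different_business = name_similarity("Holden Brothers HVAC", "Holden Brothers Plumbing")
print(f"{same_business:.2f} {different_business:.2f}")  # prints 0.55 0.73
```

No single similarity threshold separates these two cases, which is roughly where judgment calls (human or LLM) come in.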

What to do?

As a “fuzzy coder”, I used Claude to write a Python data pipeline that called Claude’s API in batch mode, using Anthropic’s cheapest model (the older Haiku).

Running this pipeline was like coordinating an army of 100k interns, each tasked with a simple comparison: “Here are two business listings. Are they the same company? Follow these guidelines...”

After refactoring this code for the third time, I realized the pattern was essentially MapReduce, one of those good old CS ideas pioneered by Google (and by others before them).

If MapReduce is a useful computing pattern, why not “Fuzzy MapReduce”? How would that work?

It would let you build data pipelines, but instead of writing Java code (or whatever), you could describe some of the desired behavior with “fuzzy” English instructions like you might write up for an intern:

“filter out any records not in California”

“rewrite addresses in a standard format like this example:…”

“merge two records only if the following are true:…”

Instead of using 1 big, expensive model, why not use an army of small, cheap, dumb (but still useful) models in parallel? Fuzzy MapReduce!
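A minimal Python sketch of the pattern, assuming a hypothetical `judge` callable that wraps one call to a small, cheap model and answers "same company?" for a pair of records. The map step fans candidate pairs out to the judge in parallel (a thread pool stands in for a provider's batch API); the reduce step merges the pairwise "yes" votes into clusters with union-find. Blocking on a key such as zip code is a common entity-resolution trick added here so a large file doesn't turn into every possible pair; it is not necessarily what the original pipeline did. `toy_judge` is an offline stand-in, not an LLM.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from itertools import combinations
from typing import Callable, Iterable, Iterator

Record = dict[str, str]
# Hypothetical judge: (English instructions, record a, record b) -> "same company?"
Judge = Callable[[str, Record, Record], bool]


def candidate_pairs(records: list[Record], block_key: Callable[[Record], str]) -> Iterator[tuple[int, int]]:
    """Only compare records that share a blocking key (e.g. zip code), not all n^2 pairs."""
    blocks: dict[str, list[int]] = defaultdict(list)
    for idx, record in enumerate(records):
        blocks[block_key(record)].append(idx)
    for ids in blocks.values():
        yield from combinations(ids, 2)


def fuzzy_map(records: list[Record], pairs: Iterable[tuple[int, int]], judge: Judge,
              instructions: str, workers: int = 8) -> list[tuple[int, int]]:
    """Map: ask the judge about each candidate pair in parallel; keep the 'yes' votes."""
    def ask(pair: tuple[int, int]) -> tuple[tuple[int, int], bool]:
        i, j = pair
        return pair, judge(instructions, records[i], records[j])

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return [pair for pair, same in pool.map(ask, pairs) if same]


def reduce_to_entities(n: int, matches: list[tuple[int, int]]) -> list[list[int]]:
    """Reduce: union-find turns pairwise 'same company' votes into clusters of record ids."""
    parent = list(range(n))

    def find(x: int) -> int:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps the trees shallow
            x = parent[x]
        return x

    for i, j in matches:
        parent[find(i)] = find(j)
    clusters: dict[int, list[int]] = defaultdict(list)
    for idx in range(n):
        clusters[find(idx)].append(idx)
    return sorted(clusters.values())


INSTRUCTIONS = "Here are two business listings. Are they the same company? Follow these guidelines..."


def toy_judge(instructions: str, a: Record, b: Record) -> bool:
    # Offline stand-in for the LLM call: same first word of the business name.
    return a["name"].split()[0].lower() == b["name"].split()[0].lower()


records = [
    {"name": "Holden Brothers HVAC", "zip": "80301"},
    {"name": "HOLDEN BROS HVAC AND REFRIGERATION INC.", "zip": "80301"},
    {"name": "Acme Plumbing", "zip": "80301"},
    {"name": "Holden Hardware", "zip": "10001"},
]
pairs = candidate_pairs(records, block_key=lambda r: r["zip"])
matches = fuzzy_map(records, pairs, toy_judge, INSTRUCTIONS)
print(reduce_to_entities(len(records), matches))  # [[0, 1], [2], [3]]
```

Swapping `toy_judge` for a real model call changes the cost and the error profile, but not the shape: map is embarrassingly parallel, and the reduce step stays deterministic.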

Fuzzy coding like this is quite different from the traditional kind. Instead of deterministic jobs and unit tests, it felt more like managing a large team of capable (but also stochastic, and somewhat gullible) interns and reviewing their work. After running a job, I’d look through tables of results to see if they looked right, only to realize my intern instructions were too ambiguous or didn’t anticipate certain weird edge cases in the data. So I’d go back, clarify the instructions, and loop again.

One clear learning for me was that having a good human review workflow to iteratively decide what “good” looks like (call it “eval” if you like) is often the limiting factor in fuzzy computing, and a new muscle many software teams haven’t built yet.
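One way to make that review loop measurable, sketched under assumptions: a human labels a random sample of candidate pairs, and the pipeline's merge decisions are scored against those labels. The function name and data shapes are hypothetical; precision and recall are the standard definitions. The disagreement list is the most useful output for the loop described above, since those are the cases to read before rewriting the intern instructions.

```python
def review_report(predicted: set[tuple[int, int]],
                  reviewed: dict[tuple[int, int], bool]) -> dict[str, object]:
    """Compare pipeline merge decisions with a human-reviewed sample of pairs.

    predicted: pairs the pipeline decided to merge.
    reviewed:  pair -> True if a human says "same company", False otherwise.
    """
    tp = sum(1 for pair, same in reviewed.items() if same and pair in predicted)
    fp = sum(1 for pair, same in reviewed.items() if not same and pair in predicted)
    fn = sum(1 for pair, same in reviewed.items() if same and pair not in predicted)
    # Disagreements are where the instructions were ambiguous or missed an edge case.
    disagreements = sorted(pair for pair, same in reviewed.items() if same != (pair in predicted))
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,  # 0.0 when nothing in the sample was merged
        "recall": tp / (tp + fn) if tp + fn else 0.0,     # 0.0 when the sample has no true matches
        "disagreements": disagreements,
    }


report = review_report(
    predicted={(0, 1), (2, 3)},
    reviewed={(0, 1): True, (2, 3): False, (4, 5): True, (6, 7): False},
)
print(report)  # {'precision': 0.5, 'recall': 0.5, 'disagreements': [(2, 3), (4, 5)]}
```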

Example 3: “Fuzzy Crons”

If you aren’t a software engineer, you probably have no idea what a “cron” is. It’s just a fancy way to say “run this job on a recurring schedule”, in computer-speak.

It’s an oldie-but-goodie idea from Computer Science that dates back to UNIX systems from the 1970s.

It’s super useful! But it also uses an arcane syntax I can never remember to specify when you want to run a job — every 5 minutes, every Monday at 8am, every 15th day of the month, that sort of thing.
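For reference, a cron expression has five fields: minute, hour, day of month, month, and day of week (0 = Sunday). A minimal Python sketch that checks whether a given minute matches an expression shows how schedules like the ones above are written. It is simplified on purpose: no month or weekday names, no `7` for Sunday, no `@daily`-style shortcuts, and any day field other than a bare `*` counts as restricted, which may differ from some cron implementations' handling of step values. The function names are illustrative.

```python
from datetime import datetime

# Five standard fields, in order: minute, hour, day of month, month, day of week (0 = Sunday).
FIELD_RANGES = [(0, 59), (0, 23), (1, 31), (1, 12), (0, 6)]


def expand(field: str, lo: int, hi: int) -> set[int]:
    """Expand one field such as '*', '5', '1-5', '*/15' or '1,15' into the values it allows."""
    allowed: set[int] = set()
    for part in field.split(","):
        base, _, step_text = part.partition("/")
        step = int(step_text) if step_text else 1
        if base == "*":
            start, end = lo, hi
        elif "-" in base:
            start_text, end_text = base.split("-")
            start, end = int(start_text), int(end_text)
        else:
            start = int(base)
            end = hi if step_text else start  # '5/15' means 'from 5 up to the max, every 15'
        allowed.update(range(start, end + 1, step))
    return allowed


def cron_matches(expr: str, when: datetime) -> bool:
    """True if a job scheduled with `expr` should fire at the minute `when`."""
    fields = expr.split()
    if len(fields) != 5:
        raise ValueError(f"expected 5 fields, got {len(fields)}: {expr!r}")
    minute, hour, dom, month, dow = (
        expand(f, lo, hi) for f, (lo, hi) in zip(fields, FIELD_RANGES)
    )
    dom_ok = when.day in dom
    dow_ok = when.isoweekday() % 7 in dow  # isoweekday: Mon=1..Sun=7, so Sun % 7 == 0
    # Traditional cron rule: if both day fields are restricted, matching either is enough.
    if fields[2] != "*" and fields[4] != "*":
        day_ok = dom_ok or dow_ok
    else:
        day_ok = dom_ok and dow_ok
    return when.minute in minute and when.hour in hour and when.month in month and day_ok


# "every 5 minutes", "every Monday at 8am", "every 15th day of the month" (at midnight)
monday_8am = datetime(2025, 1, 20, 8, 0)  # 20 January 2025 was a Monday
for expr in ["*/5 * * * *", "0 8 * * 1", "0 0 15 * *"]:
    print(expr, cron_matches(expr, monday_8am))
```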

Since crons are very useful and ubiquitous in computing, how would “fuzzy crons” work?

Well, instead of writing in a precise but cryptic syntax like this:

1 0 * * * echo hello world

you could instead describe and schedule a task in more intuitive language, like this:

keep an eye on flights from DEN to airports within 1h of west orange nj in september. no red eyes or flights before 8am. if you see any big price drops send me an email with links to the cheapest price

No, you can’t have my credit card, and I certainly don’t trust you to actually book anything for me 😂, just do this boring repetitive research task every day and let me know if you can save me money.

Or if I was into stock picking (I’m not), how about:

check Apple's website every day after market close. whenever you see any new quarterly earnings posts, read the financial statements and 10-Q filings and send me a 1-page tl;dr. also monitor my stock portfolio in yahoo finance and do the same for any other stocks you find there. kthxbye

Or if I was a CSM at a B2B startup, how about something like this:

monitor all the accounts assigned to me in Salesforce and watch for any upcoming client check-in or QBR meetings on my calendar

every monday at 8am add a meeting to my calendar linking to draft qbr decks for every client meeting I have that week, following this template

always pull the latest usage #s from Looker and customer ticket history from Zendesk, and btw be sure to check the client’s website and use their most recent logo and brand colors throughout. thanks ur the best 😘

A CSM once told me it took them 6 hours of work to make every deck like this, and they hated that part of their job.

Where else could fuzzy crons free up humans from repetitive tedious tasks like this, so they can focus more on the uniquely human or more fulfilling parts of their job?
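The fuzzy cron examples above share one shape: a deterministic schedule (the part classic cron already handles well) wrapped around fuzzy English instructions, plus a rule for when to bother a human. A minimal sketch of that shape, assuming a hypothetical `llm` callable and a made-up JSON reply convention (`notify`, `message`); the class, field names, and the `outbox` list standing in for email are illustrative, not the ChatGPT Tasks API or any other product's interface. It deliberately leaves out tool access (browsing, calendars, Salesforce), which is where most of the real work and risk would be.

```python
import json
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical model interface: prompt in, text reply out.
LLM = Callable[[str], str]

REPLY_FORMAT = 'Reply with JSON only, shaped like {"notify": true, "message": "..."}.'


@dataclass
class FuzzyCron:
    cron: str          # deterministic "when", handed to an ordinary scheduler, e.g. "0 9 * * *"
    instructions: str  # fuzzy "what", written in plain English
    outbox: list[str] = field(default_factory=list)  # stand-in for email/notifications

    def run(self, llm: LLM) -> bool:
        """Run one scheduled invocation; return True if the user was notified."""
        reply = llm(f"{self.instructions}\n\n{REPLY_FORMAT}")
        try:
            verdict = json.loads(reply)
        except json.JSONDecodeError:
            # Fuzzy output can be malformed; surface it rather than fail silently.
            self.outbox.append(f"fuzzy cron could not parse model reply: {reply[:80]!r}")
            return True
        if isinstance(verdict, dict) and verdict.get("notify"):
            self.outbox.append(str(verdict.get("message", "")))
            return True
        return False


flights = FuzzyCron(
    cron="0 9 * * *",  # every day at 9am
    instructions="keep an eye on flights from DEN to airports within 1h of west orange nj in september. ...",
)
quiet_day = lambda prompt: '{"notify": false, "message": ""}'  # stub model: nothing to report
print(flights.run(quiet_day), flights.outbox)  # False []
```

Keeping the "when" deterministic and only the "what" fuzzy means a misbehaving model can at worst produce a bad report, not a job that runs at the wrong time.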

From Chat → Tools → Tasks → Crons?

ChatGPT was born in 2022 as “Chat” — text messages in, text messages out.

In 2023-24, it learned to use “tools” (aka function calling), and to see, hear, speak, draw, browse, and code (multi-modal inputs and outputs).

So far, in the first month of 2025, it’s added the ability to create and schedule tasks, control a web browser, and perform deeper research to synthesize many sources into a multipage “report” with citations. AI is becoming both more capable and more asynchronous.

In particular, the Tasks feature looks exactly like an early version of “fuzzy crons”!

I suspect the horizontal AI products (from OpenAI, Google, MS, etc.) will create generic versions of these workflows, but some of the most valuable applications will likely be vertical solutions deeply integrated into professional workflows, like specialized tools for developers, lawyers, or financial analysts.

As AI workflows evolve from real-time chat to asynchronous tasks, and from conversation to automation, we'll need new ways to manage and review fuzzy processes, with key primitives like notifications or review workflows.

The future might look less like chatting with an AI assistant and more like coordinating an army of AI interns running your fuzzy tasks, crons, and pipelines while you sleep.

Other fuzzy patterns?

We're already seeing other “fuzzy” patterns emerge. For example, Command Palettes, originally a hybrid of CLI and GUI interfaces, have evolved into “Fuzzy Command Palettes” that accept natural language input across a range of apps.

What other foundational CS concepts are ripe for a fuzzier evolution? CLIs? Schedulers? State machines? If you have ideas about how rigid systems could become more flexible, I'd love to hear about them!

Fuzzy computing as personal computing

One AI theme that excites me is the possibility for more accessible personal computing tailored to the specific needs of more people.

Traditional coding offers immense power to automate and solve problems. But it has two major limitations:

It’s ⛔ inaccessible to perhaps 99% of the people in the world.

It’s 💰 expensive to write, debug, and maintain.

The high cost of code means many tasks that might save someone time or help them be more productive never get automated. Often the person who best understands a specific problem isn’t a coder, or, even if they are, writing and maintaining the code would cost more than it saves.

But fuzzy computing could fundamentally change this equation. If automation becomes cheaper to create and maintain, and describable in natural language instead of “code”, the cost/benefit analysis could shift dramatically.

And what if we could make truly personal computing accessible to more people (scientists, lawyers, designers, journalists, architects, support reps, and so on) in the language they already speak, one evolved for human communication, rather than a specialized “coding” language designed for computers?

How many people get scared off by their first encounter with “code” who would otherwise benefit from creating their own personal tools and workflows and automations that best serve their needs?

While I do worry about AI's potential for job displacement, fuzzy computing might also democratize automation, giving more people the ability to create their own tools and shape technology to their specific needs, rather than the other way around.

Appendix

(Warning: Only for the 🤓 Super Nerds)

If you’ve ever heard of Category Theory, one way to think about a traditional “compiler” is as a functor from the category of valid “Source Code” to the category of “Executable Programs”.

(In fact, it’s a family of functors: one for each pairing of source language/version and target architecture. There are also interesting natural transformations between these compiler functors that preserve the semantic meaning and behavior of a program, such as switching C++ optimization flags or changing a TypeScript target from ES5 to ES6.)
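For readers who want the definitions behind the analogy, these are the standard functor and naturality conditions, written for a compiler functor C and a second compiler functor C' (for example, the same compiler with different optimization settings). Which morphisms the categories of source code and executables have is left open in the analogy; reading them as semantics-preserving program transformations is an assumption here, not something stated above.

```latex
% Functor laws for a compiler C : \mathbf{Src} \to \mathbf{Exe}
C(\mathrm{id}_A) = \mathrm{id}_{C(A)}, \qquad C(g \circ f) = C(g) \circ C(f)

% Naturality of \eta : C \Rightarrow C', with one component \eta_A : C(A) \to C'(A)
% per source program A:
C'(f) \circ \eta_A = \eta_B \circ C(f) \quad \text{for every } f : A \to B
```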

What then is an LLM-powered “fuzzy compiler” in this view?

I think of it as a functor from the (fuzzy) category of User Intents (i.e. natural language instructions in various human languages, including ambiguity and typos) to some specific domain of Artifacts, which could be many things, such as:

1024x1024px PNGs (for DALL-E or Midjourney)

Python 3.12 programs to be run in pyodide/wasm (ChatGPT), or React/Next.js apps using a specific choice of tech stack (v0.dev)

A PRD using my team’s most recent template (ChatPRD)

Redlines of .docx legal contracts (Harvey)

…and 1000 other possible domains…

While traditional compilers require precise, unambiguous source inputs, fuzzy compilers accept a much more fluid category of user intents, where ambiguity, context, and emotional expression are features, not bugs. The “grammar” of valid inputs is intuitive, expressive, and natural, focused on semantic meaning or intent rather than rigid syntax.

Fuzzy compilers also expand what the target category can be. They can produce artifacts that traditional Software 1.0 methods struggle to create, from visual designs to legal redlines to product roadmaps.

Fuzzy compilers make weaker guarantees than traditional ones (e.g. they're non-deterministic!), but this trade-off enables a profound shift: from computation that requires humans to think like machines to computation that allows machines to understand human intent. This opens up many new adjacent possibilities for more intuitive, accessible, and democratized personal computing.
